Tangible and Embodied Interaction (2019) – Week 4

In the previous course we came into contact with artificial intelligence, and although I found it very interesting, in the end I did not enjoy the topic that much because it was rather complex. This week, however, we will play with input and output through Processing. I already have some experience with Processing from previous years and really enjoyed it. According to Daniel, we will work on generating sounds, which I am a big fan of. I play around with Fruity Loops, a digital audio workstation, quite often, and I am curious to see how we can generate sounds through machine learning.

Introduction & Literature (2nd of December)

The purpose of this week was to explore AI technologies through tinkering and discussion. We were to work with Wekinator, a machine learning software that allows one to build creative systems such as musical instruments. Inputs and outputs could be created with, for example, Processing and Arduino. I was pleased that this week centred on exploring and learning from failure, because it allowed me to take risks.

The lecture largely repeated our insights on machine learning from the previous course. A drawback of computers is that they cannot interpret the context of a situation the way we humans do. By giving a computer examples to learn from, it can learn to handle fuzzy problems, such as recognizing a face based on certain data. In the previous course we created input with an accelerometer and a gyroscope, based on movement interaction. We trained the machine to recognize movements, but did not really experiment with the output. This course, however, was intended to train the machine on both input and output.

Compared to the previous three weeks, I found the literature very complex and less interesting, and I was keen to start experimenting. What I found very interesting in the article “Sensori-Motor Learning with Movement Sonification: Perspectives from Recent Interdisciplinary Studies” (Bevilacqua et al., 2016) was the combination of movement-oriented and sound-oriented tasks. The authors conducted an experiment in which sounds were used to specify a movement (i.e., performing a movement while being guided by sound feedback).

I think it would be interesting to use this combination in sports such as football. For example, I can imagine that training to pass a ball at a certain speed could be improved by means of sound.

In addition, I see potential in using sound feedback when performing dance movements. Sound can not only guide dance movements; dance movements can also produce sound feedback, which reverses the concept entirely. The authors did, however, mention that people are likely to exhibit different audio-motor abilities, which could become problematic in sports where precision is key.

The second article, “Kontrol: Hand Gesture Recognition for Music and Dance Interaction” (Christopher et al., 2013), was a little more inspiring to me. In fact, it ultimately inspired one of the experiments described later. According to the authors, hand gestures are an important part of our communication: they can reinforce our speech and express our emotions, including while playing a musical instrument. By using their tool (i.e., Kontrol) as an extension of one’s arm, they were able to use this form of communication as input for an electronic output. This gave me the idea of using hand gestures to play ‘air’ instruments, completely separate from a physical instrument. It is a bit like conducting a choir, except that we play the instrument ourselves. I saw it as a tool that could turn the air guitar into a real instrument.

The last article I read, “Sansa: A Modified Sansula for Extended Compositional Techniques Using Machine Learning” (Macionis et al., 2018), actually builds a little on the inspiration I gathered from the previous article. The authors extended an acoustic instrument to enhance its possibilities and enable a musician to have more complex interactions with different media. I think this concept could be used to adapt an acoustic instrument for electronic music, where one instrument serves as input for many different outputs.

The drawback of this, in contrast with the Kontrol tool from the previous article, is that the technology must be attached to the instrument, which disrupts the way the instrument is usually played. Given the many challenges the authors listed at the end, I am not yet convinced that this is a useful tool.

List of notes about the topic

- The combination of movement-oriented and sound-oriented tasks can be valuable while thinking in terms of machine learning.

- By using tools as an extension of the human body or of an instrument, we are able to have more complex interactions through multiple types of media.

Exploration & Experimentation (3rd of December)

The Tuesday lecture was meant to introduce and experiment with AI and to set up the Wekinator software. After playing with some of the Google AI experiments, I concluded that one of the ideas that came up while reading the articles has already been implemented, albeit in a rather specific form. The Semi-Conductor enables one to conduct an orchestra through hand gestures, using computer vision. However, the piece that is played is predefined.

Unfortunately, I forgot to record my own results from the Google AI experiments, so I decided to illustrate this section with the YouTube videos that accompany the experiments.

Another project that caught my attention was the Quick, Draw! experiment. I think it is very interesting to see how machine learning can guess what is being drawn, based on input from millions of people. It was actually very accurate in guessing my drawings, and I was surprised by its capabilities. However, it was less in line with what I had in mind for this week.

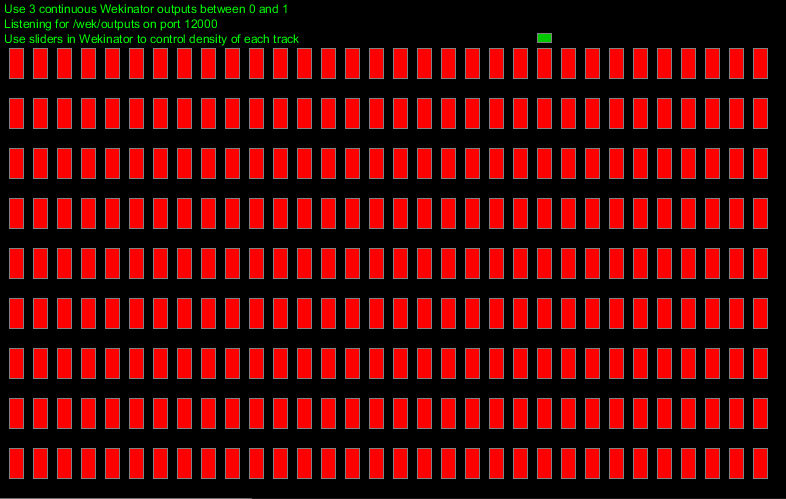

After playing around with different experiments, we set up the Wekinator software. I tinkered a bit with the different input and output examples and started wondering whether Fruity Loops could be used as an output source. After a little research I concluded that this was probably not possible, or at least not within this week. I started thinking: which aspect of Fruity Loops would I like to recreate and use as a source of output? I immediately thought of the Processing drum machine that came with the output examples folder.

Each bar represents a beat and the output is constantly being looped.

The drum machine has some similarities with the Fruity Loops playlist and piano roll, in which different sounds or notes can be placed and triggered according to the beat. But what was going to be my source of input? As I mentioned before, the articles inspired me to make air instruments a reality. Daniel mentioned the Leap Motion controller during his lecture, and luckily one of my teammates managed to get the hang of it. As a first experiment, I thought it would be very interesting to have various gestures trigger different samples, so that we could make our own beat (like a drum pad).
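Wekinator learns such a mapping from examples instead of rules. As a rough sketch of the idea (not Wekinator’s own implementation, although it does offer k-nearest neighbour among its classifiers), the snippet below assumes each Leap Motion frame has been reduced to three hypothetical features scaled to 0..1, and picks the drum sample whose recorded example is closest. The feature names, values and sample labels are all made up for illustration.

```python
from collections import Counter
import math

# Hypothetical training examples recorded while demonstrating gestures:
# (feature vector, drum sample to trigger). The three features are assumed
# to be palm height, palm roll and extended fingers, each scaled to 0..1.
TRAINING = [
    ((0.2, 0.5, 1.0), "kick"),
    ((0.8, 0.5, 1.0), "snare"),
    ((0.5, 0.1, 0.2), "hihat"),
    ((0.5, 0.9, 0.0), "clap"),
]

def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def classify(features, examples=TRAINING, k=1):
    """Return the most common label among the k nearest training examples."""
    nearest = sorted(examples, key=lambda ex: distance(ex[0], features))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

print(classify((0.25, 0.45, 0.9)))  # prints kick
```

Recording more examples per gesture and raising `k` would make the choice less sensitive to a single noisy demonstration.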

While my teammates worked on the Leap Motion controller, I focused on the drum machine. I created a number of sound samples based on the song Still D.R.E. by Dr. Dre that we could use in our drum system. The song has a very simple and generic composition, so I thought it would be an easy way for us to get started. In the drum machine I added a number of new rows for these samples. Although it took quite a lot of time because everything was hard-coded, I thought it was a fairly simple task. If I had had more time this week, I would have written more flexible code, which would have allowed for a bigger variety of samples.
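The more flexible version I had in mind would generate the rows from a list of samples instead of copying code for every row. A minimal sketch of that idea, written in Python rather than Processing and with hypothetical sample file names, could look like this:

```python
STEPS = 32  # beats per loop, as in the drum machine example

# Hypothetical sample files; adding a sound means adding one entry here.
SAMPLE_FILES = ["kick.wav", "snare.wav", "hihat.wav", "piano_c.wav"]

def make_grid(sample_files, steps=STEPS):
    """Create one row of switched-off steps for every sample file."""
    return {name: [False] * steps for name in sample_files}

def toggle(grid, sample, step):
    """Switch a single step of one row on or off."""
    grid[sample][step] = not grid[sample][step]

grid = make_grid(SAMPLE_FILES)
toggle(grid, "kick.wav", 0)
toggle(grid, "snare.wav", 4)
print(len(grid), grid["kick.wav"][:5])
```

Because the grid is built from data, seven octaves of piano samples would only mean a longer list, not hundreds of extra lines.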

After all of the sound samples were working correctly, we spent some time connecting the Leap Motion, Wekinator and the drum pad. Unfortunately, we were not able to train the machine on our own input. I suspect a bug in the drum pad code, which only seemed to let Wekinator send randomized values to the drum pad. Even though we were able to train the system and have it work with randomized beats (which sounded horrible), we were not happy with the result. We named it ‘The Drunken Dre’, as it was supposed to sound like his song but clearly did not.

As we had no clear solution in mind, we decided to use a Wizard of Oz approach to showcase how we imagined training Wekinator for our air drum pad. The hand gestures were not predefined, so they convey no meaning in the video below. The output of the drum pad did not change either, because it was not actually linked to the input. The idea, however, was to switch between chords with different gestures.

List of insights from experimenting

- There are already some experiments available that rely on gestures as musical input.

- The provided tools can constrain our ideas, and coding for machine learning is rather complex. However, speculating without feeling limited by technology can support ideation, and results can be generated through different prototyping methods.

Seminar (4th of December)

This week the seminar was organised quite differently than I had expected. Every individual had to submit one or more questions about the literature. I saw these questions as a way to open up a discussion. Because two things in the articles rather confused me, I wrote down the following:

- What opportunities does Kontrol, in combination with the Guqin, yield? I did not really understand the purpose of this, as the authors drew no conclusions about this combination of tools.

- What limitations could other instruments have when applying technology like the authors did with the Sansa? They only discussed an instrument that is less commonly played than, for example, the piano or the guitar. I wondered how the result would change if the authors had implemented the technology in different instruments, and was curious whether people had thought of limitations for more commonly played instruments.

Unfortunately, my questions were not discussed during the seminar, and because of its rapid pace I gained only a few important insights. Our group got the following question:

“How can one differentiate between sound-oriented tasks and movement-oriented tasks?”

Our answer was straightforward: “You pay attention to different motor levels. The different number of parameters needed to complete a specific task differentiates the two.” We could not find anything interesting to add, because the answer seemed rather obvious. Two other groups had very similar questions, which opened up some discussion. I concluded the following:

The combination of sound and movement seems very interesting, since movements can be used to trigger certain sounds and vice versa. This could, for example, be applied in sports; it is already used in dance, but not yet in many sports. According to Daniel, it is about creating different ways of giving feedback. This is very similar to what I concluded from the article myself, so it gave me few new insights.

Another interesting question that came up during the seminar was: “Why do we need to put more technology into art?” Art can use technology as a form of critical reflection, and technology has always been involved in some form of art. I think technology generally makes art more interesting. One could argue that art and technology cannot be separated at all, as it is in our nature to combine them.

List of seminar notes:

- Differentiating between sound- and movement-oriented tasks is about creating different ways of giving feedback.

- Art can use technology as a form of critical reflection, although one can question whether the two can be separated at all, given our nature of combining them.

Further Experimentation (4th of December)

Because we finished the drum pad idea rather quickly on Tuesday, and because Daniel needed some time to look into the problem we had when training Wekinator, I started experimenting with the code to make my ‘air piano’ a reality. First, I created piano samples of each note across 7 octaves in Fruity Loops. This was rather time-consuming, but having the samples ready is always a good thing. Second, I created a new row in the drum pad for each of the notes. Lastly, I made sure we only had one beat to work with, instead of 32. The idea behind this was that each finger, tracked by the Leap Motion controller, would trigger a different note.

The problem was that the drum machine is meant to loop a beat at a certain tempo, so once a note has been activated it is triggered again on every loop. In addition, if Daniel was not able to fix the problem with the drum machine, we would not even be able to trigger the notes individually. A rather frustrating failure, as I had spent quite some time coding.
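The difference between the two behaviours can be sketched in a few lines. This is a simplified model under my own assumptions, not the drum machine’s actual code: a step sequencer fires every active step on every pass of the loop, whereas an air piano needs a one-shot trigger that fires only at the moment a (hypothetical) finger-down signal switches from up to down.

```python
def sequencer_events(row, loops):
    """A looping step sequencer fires every active step on every pass."""
    events = []
    for loop in range(loops):
        for step, active in enumerate(row):
            if active:
                events.append((loop, step))
    return events

def one_shot_events(finger_states):
    """A one-shot trigger fires only when a finger changes from up to down."""
    events = []
    previous = False
    for frame, down in enumerate(finger_states):
        if down and not previous:
            events.append(frame)
        previous = down
    return events

# One active step, as in the single-beat version of the drum machine:
print(sequencer_events([True], loops=4))  # [(0, 0), (1, 0), (2, 0), (3, 0)]
# A finger held down for three frames, released, then pressed again:
print(one_shot_events([False, True, True, True, False, True]))  # [1, 5]
```

Even with a single beat, the sequencer keeps repeating the note, which is why reducing the grid to one step was not enough.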

I had to come up with a better solution and Daniel advised me to look into the FM synth example. In this example, a synthesizer changes the sound depending on the position of your mouse in the canvas. I created a similar experience where notes of one octave will be played depending on the position of your mouse (see video below).
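The mouse mapping boils down to dividing the canvas width into equal zones, one per note, and converting the chosen note to a pitch. A small sketch of that logic, assuming a chromatic octave starting at middle C (MIDI note 60) and standard equal temperament with A4 at 440 Hz; the zone layout is my own illustration, not necessarily how the FM synth example does it:

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
BASE_MIDI = 60  # assumed root note: middle C (C4)

def note_for_mouse(mouse_x, width):
    """Split the canvas width into 12 equal zones, one per semitone."""
    zone = int(mouse_x / width * 12)
    zone = min(max(zone, 0), 11)  # keep positions outside the canvas in the octave
    return BASE_MIDI + zone

def frequency(midi_note):
    """Equal-tempered frequency in Hz, with A4 (MIDI 69) tuned to 440 Hz."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)

for x in (0, 300, 599):
    n = note_for_mouse(x, width=600)
    print(x, NOTE_NAMES[n % 12], round(frequency(n), 2))
```

Swapping the mouse position for a hand position from the Leap Motion would only change where `mouse_x` comes from.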

The next step would be to connect this Processing sketch to Wekinator, using the Leap Motion as the input source. In addition, if Daniel manages to fix the problem we had with Wekinator, we might get the input for the drum pad working tomorrow.

Quick notes

- Experimenting can be rather time consuming and it is best for me to think my idea through first and discuss it with the group.

- Try to make the prototype work with fewer samples at first instead of creating the entire idea in one go. This creates better opportunities for debugging.

Concluding our experiment (5th of December)

According to Daniel, it is not possible to take full control of the output, as in this case it is generated by Wekinator. It is, however, possible to adjust the sliders to produce a specific beat. After playing around with the sliders for a while, we managed to make a better-sounding beat than ‘The Drunken Dre’, but we were unable to adjust the sliders to play the exact song we had in mind.

I did not want to leave it there, so I started thinking about ways to improve the code. Looking into it, I discovered that certain float values were being sent to Wekinator to enable communication between the input and output. These values could be adjusted to create a specific beat, but given the number of samples we had used and the little time we had left, there was no realistic way to start. We therefore decided to settle for the imperfect beat and focus on reflecting in preparation for the presentation.
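To illustrate why adjusting those floats could produce a specific beat, here is a hypothetical sketch. I am assuming that the drum sketch receives one float per step and treats values at or above 0.5 as an active step; the actual example code may use a different encoding or threshold. Writing out the floats for a chosen pattern then reproduces that pattern exactly.

```python
THRESHOLD = 0.5  # assumed cut-off; the real drum sketch may differ

def floats_to_steps(values, threshold=THRESHOLD):
    """Turn continuous output values into an on/off step pattern."""
    return [v >= threshold for v in values]

def steps_to_floats(pattern):
    """Go the other way: write out exact values for a chosen beat."""
    return [1.0 if on else 0.0 for on in pattern]

# Hypothetical kick pattern on steps 1 and 5 of an 8-step bar
target = [True, False, False, False, True, False, False, False]
values = steps_to_floats(target)
print(floats_to_steps(values) == target)  # True
print(floats_to_steps([0.1, 0.7, 0.49, 0.5]))  # [False, True, False, True]
```

With one value per step and per sample, a full song quickly needs hundreds of values, which matches why we did not get that far.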

I personally think Wekinator is a great tool for rapid prototyping with machine learning. It enables easy communication between interesting forms of input (e.g., the Leap Motion controller, computer vision) and output (e.g., sounds, text, drawings).
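This communication runs over OSC (Open Sound Control) messages sent via UDP. As far as I know, Wekinator by default listens for inputs on port 6448 at the address `/wek/inputs` and sends its outputs to port 12000 at `/wek/outputs`, but it is worth checking these settings in your own version. The Processing examples use a library for this; the sketch below builds the message by hand, following the OSC 1.0 encoding, so it has no dependencies. The feature values are hypothetical.

```python
import socket
import struct

def osc_string(text):
    """OSC strings are null-terminated and padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address, floats):
    """Build an OSC message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", value) for value in floats)
    return osc_string(address) + osc_string(type_tags) + payload

def send_to_wekinator(features, host="127.0.0.1", port=6448):
    """Send one frame of input features to Wekinator over UDP."""
    packet = osc_message("/wek/inputs", features)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))

packet = osc_message("/wek/inputs", [0.25, 0.5, 1.0])
print(len(packet))
```

Any program that can produce these bytes, whether a Leap Motion script or an Arduino bridge, can act as an input, which is what makes the tool so easy to combine with other sketches.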

I think a drawback of Wekinator is that its use is limited by the lack of user control over the output. For example, we wanted to train the model on a specific output generated in Processing, but Wekinator only allowed its own values to control the Processing drum sketch.

On the other hand, I feel there was too little time to discover all the possibilities that Wekinator offers, so my experience may differ from that of my peers. I do, however, think that this tool has more to offer than the tools we used in the previous course, because it allows for easy communication between multiple sketches, whereas the tools used in the previous course could do very little within a limited amount of time.

I think we succeeded in making noise, although it was far from what I wanted it to be. Unfortunately, I had no time left to continue working on the air piano; I might play around with it in the near future, because it is something I am very interested in.

Concluding thoughts

- Wekinator did not allow us to have full control of our output, thus I think we should have explored its possibilities in advance.

- I think Wekinator is a great tool for rapid prototyping with machine learning, as it allows for easy communication between multiple sketches for both input and output.

- There was too little time to discover all the possibilities that Wekinator offers.

Presentations (6th of December)

Unlike in previous weeks, the round of presentations started with a demo session of each project. I quickly noticed that the other groups that had used the Leap Motion controller had an idea similar to ours. I personally thought Simon’s synthesizer sounded much better, because they used simple sound samples that were not linked to a specific song. As a result, the instrument sounded better when given random input, which made the experience more pleasant and less jarring than ours. This project was more in line with how I had imagined the air piano would work. They ran into the same problems we had encountered, so better exploration of Wekinator is necessary to grasp its strengths.

A number of groups had made use of physical artifacts, which gave me the feeling that we had been slacking this week, even though we had put in a lot of work. I think the strength of a physical artifact is its tangibility, which was missing in our project. When playing with the Leap Motion, changing sounds without touching anything did not give me a magical feeling. When interacting with the plant from Julija’s group, however, I did get that magical feeling for some reason. This confirms what the literature described: a musician handles an instrument in a unique way. I think it is not just about the music, but also about playing (i.e., touching and feeling) the instrument. So far, I am not convinced that the air piano is a strong concept.

In terms of feedback on our presentation, Daniel mentioned that the mental model we had in mind was most likely different from the mental model of the tool’s creator. This could be why we ran into a wall. In future design practice, we should treat constraints as opportunities to discover the full strength of a tool.

A final note for this week

- Better exploration of Wekinator is necessary to grasp its strengths. It is good to compare one’s own mental model with that of the tool’s creator. Exploring the limitations and opportunities of a tool helps in exploiting its strengths.

Literature

Bevilacqua, F., Boyer, E. O., Françoise, J., Houix, O., Susini, P., Roby-Brami, A., & Hanneton, S. (2016). Sensori-Motor Learning with Movement Sonification: Perspectives from Recent Interdisciplinary Studies. Frontiers in Neuroscience, 10. https://doi.org/10.3389/fnins.2016.00385

Christopher, K. R., He, J., Kapur, R., & Kapur, A. (2013). Kontrol: Hand Gesture Recognition for Music and Dance Interaction. NIME.

Macionis, M. J., & Kapur, A. (2018). Sansa: A Modified Sansula for Extended Compositional Techniques Using Machine Learning. NIME.